#Extract standard deviation from stock yearly

#Go to link to extract data

#https://finance.yahoo.com/quote/FB/history?p=FB&.tsrc=fin-tre-srch

#After that, apply this formula to every row of column H: =(C1/B1 - 1) * 100

#and this one to column I: =(1 - D1/B1) * 100

#The reason is to determine the percentage variation. Next project: automate this with Python
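The two spreadsheet formulas above could be automated along these lines. This is only a minimal sketch, assuming pandas is installed and that the columns follow the order used later in this post (date, open, high, low, ...), so that B is the open, C the high and D the low. The function name add_range_columns and the sample rows are made up for illustration.

```python
import io
import pandas as pd

# Made-up sample rows in the assumed column order: date, open, high, low, close
SAMPLE_CSV = """2019-01-02,100.0,110.0,95.0,105.0
2019-01-03,200.0,210.0,180.0,190.0
"""

def add_range_columns(df):
    """Add the percentage distance from the open to the high and to the low."""
    out = df.copy()
    out["range_high"] = (out["high"] / out["open"] - 1) * 100  # =(C1/B1 - 1) * 100
    out["range_low"] = (1 - out["low"] / out["open"]) * 100    # =(1 - D1/B1) * 100
    return out

prices = pd.read_csv(io.StringIO(SAMPLE_CSV), header=None,
                     names=["date", "open", "high", "low", "close"])
ranges = add_range_columns(prices)
print(ranges[["date", "range_high", "range_low"]])
```

With a real download, the io.StringIO(...) argument would be replaced by the path to the CSV file.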

#Your data should look like image below.

#facebook_2019

import pandas as pd #import libraries

import matplotlib.pyplot as plt

#code below is from other year

#Before opening the CSV, erase the header row (e.g. Date, Open, ...) as it could

#cause problems when extracting the data. I will come back to reduce this manual step, hopefully with code.

#Point to the path of your CSV (comma-separated values) file

path = "FB_2019.csv" # path to the data file

df = pd.read_csv(path, header=None)

headers= ['date','open','High','low','close','Adj Close','Volume', 'range_high', 'range_low']

df.columns = headers

data2019 = df[['date','open','High','low','close','Adj Close','Volume','range_high', 'range_low']].describe()

#In the code above, replace 'date' or 'open' with 'low' or any other name in headers

print('data2019')

print(data2019)

year_of_selection = df[['open']]

import matplotlib.pyplot as plt

plt.plot(year_of_selection)

plt.ylabel('prices 2019')

plt.show()

#You could choose any column you want by modifying data2019
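Since the goal of this post is the standard deviation, it can also be pulled out on its own instead of being read off the full summary. A small sketch with made-up prices, assuming pandas; describe() and .std() both report the sample standard deviation. The name df_example is hypothetical.

```python
import pandas as pd

# Made-up prices standing in for the 'open' and 'close' columns of the CSV
df_example = pd.DataFrame({"open": [10.0, 12.0, 14.0],
                           "close": [11.0, 13.0, 15.0]})

summary = df_example.describe()         # same kind of table as data2019
std_from_summary = summary.loc["std"]   # the 'std' row: one value per column
std_close = df_example["close"].std()   # the same number, computed directly

print(std_from_summary)
print(std_close)
```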

Thursday, July 25, 2019

Friday, May 31, 2019

Regression model of highway-mpg and price

#The code below is for a linear regression between miles per gallon (mpg) and the price of a vehicle

#As a reminder, a # (hash) starts a comment, so the code below is explained in lines that begin with #

#import libraries

import pandas as pd #useful to open csv comma separated values documents

import numpy as np #useful for numerical arrays

import matplotlib.pyplot as plt #useful to graph

import seaborn as sns #useful to draw statistical data https://seaborn.pydata.org/

# path of data

path = 'https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/DA0101EN/automobileEDA.csv'

#The code below reads the csv file into a table (DataFrame) with the pandas library

df = pd.read_csv(path)

df.head()

# Create object

from sklearn.linear_model import LinearRegression

lm = LinearRegression()

lm

X = df[['highway-mpg']] #the double brackets give a one-column DataFrame (the predictor); Y below is a single column (the target)

Y = df['price']

#width and height determine the size of the graph

width = 12

height = 5

#code below graphs the data from the csv with matplotlib as plt and seaborn as sns

plt.figure(figsize=(width, height))

sns.regplot(x="highway-mpg", y="price", data=df)

plt.ylim(0,)

correlation = df[["peak-rpm","highway-mpg","price"]].corr()

print(str(correlation)+ ' this is correlation between peak-rpm,highway-mpg,price')

#We can conclude that the slope is negative. Predictions lie on that line, and the MSE measures how far the actual prices fall from it

#Below is the residual

#The residual helps you to determine the accuracy of the predictor

width = 12

height = 10

plt.figure(figsize=(width, height))

sns.residplot(x=df['highway-mpg'], y=df['price'])

plt.show()
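The lm object created above is never fitted in this post. To illustrate what the fitted line, the residuals and the MSE mean, here is a sketch that uses numpy.polyfit as a stand-in for LinearRegression, on made-up numbers rather than the automobile data.

```python
import numpy as np

# Made-up (highway-mpg, price) pairs, for illustration only
mpg = np.array([20.0, 25.0, 30.0, 35.0, 40.0])
price = np.array([30500.0, 24500.0, 20000.0, 15500.0, 9500.0])

# Least-squares straight line: price ~ slope * mpg + intercept
slope, intercept = np.polyfit(mpg, price, 1)

predicted = slope * mpg + intercept
residuals = price - predicted          # what a residual plot draws against mpg
mse = float(np.mean(residuals ** 2))   # mean squared error of the fit

print(slope, intercept, mse)
```

A negative slope means that price falls as highway-mpg rises. If the residuals show no clear pattern around zero, a straight line is a reasonable model.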

Tuesday, May 28, 2019

Ecuador Oil revenue

The other data used to estimate revenue were obtained from https://contenido.bce.fin.ec/documentos/Estadisticas/Hidrocarburos/ASP201712.pdf, an official government source.

An average daily production figure was used because day-by-day production data is not available, or was not found for this analysis.

The average daily production was set at 543,095.9 barrels of oil, and revenue was estimated by multiplying it by the price of WTI oil.
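The estimate described above is a single multiplication per day. A minimal sketch follows; the production figure comes from this post, while the WTI prices and the function name estimate_daily_revenue are hypothetical.

```python
# Average daily production taken from the post, in barrels per day
AVERAGE_DAILY_BARRELS = 543_095.9

def estimate_daily_revenue(wti_price_usd):
    """Estimated revenue for one day: average production times that day's WTI price."""
    return AVERAGE_DAILY_BARRELS * wti_price_usd

# Hypothetical WTI prices in USD per barrel, not real quotes
sample_prices = [50.0, 52.5, 48.0]
daily_revenue = [estimate_daily_revenue(p) for p in sample_prices]
total_revenue = sum(daily_revenue)

for price, revenue in zip(sample_prices, daily_revenue):
    print(f"WTI {price:.2f} USD -> {revenue:,.2f} USD")
print(f"Total: {total_revenue:,.2f} USD")
```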

2015 analysis.

The labels are as follows:

date price open high low vol change

2016 analysis

date price open high low vol change

2017 analysis

date price open high low vol change

2018 analysis

date price open high low vol change

This method results in an estimate very close to the actual revenues, which are shown in the first chart.

This data matters because oil is one of the country's main sources of revenue and makes up a big part of the economy. To predict data, please see Predicting data and other topics in this blog.

Saturday, May 25, 2019

Getting and Drawing data

Getting Data

I have loved data from an early age. I remember the time my dad took me to a store where I found the world almanac; that was back in 2005. It was a whole discovery. It also led me to the question of how to get the best data. An almanac relies on official sources, but where is the best place to find the best data? I write this post from Canada, where there are several sources of information. Depending on your needs, you could begin your research with the official statistics of your country.

Begin your data search with official sources such as the statistics site of your city or country.

You could also look at academic sources such as Google Scholar, PubMed and ResearchGate.

Look for available databases of universities as well.

I will write more on this in the future.

Drawing Data

To begin with, you could use Excel to get a first idea of the data. The chart below shows a histogram of salaries in Toronto.

To open this file in Python:

>>> file = open('data.csv', 'r') # this opens the 'data.csv' document for reading

>>> file.readline() # this returns the first line of the document

file = open('stats0.csv', 'r')

while input() != 'end': # press Enter to read the next line; type end to stop

    a = file.readline()

    print(a)

file.close()

The data is not processed yet.
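As a first step toward processing, the csv module in the standard library splits each line into fields. This is a minimal sketch; the sample text, its column names and the function name read_rows are invented, since the contents of stats0.csv are not shown here.

```python
import csv
import io

# Invented sample standing in for the contents of a CSV file
SAMPLE = "occupation,salary\nteacher,60000\nnurse,70000\n"

def read_rows(handle):
    """Return the header and the remaining rows of a CSV file as lists of strings."""
    reader = csv.reader(handle)
    header = next(reader)
    rows = list(reader)
    return header, rows

header, rows = read_rows(io.StringIO(SAMPLE))
salaries = [float(row[1]) for row in rows]  # convert the text to numbers
print(header)
print(salaries)
```

With a real file, io.StringIO(SAMPLE) would be replaced by open('stats0.csv', newline='').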

Read other tutorials to see how to manipulate data and prepare data.

Tuesday, May 21, 2019

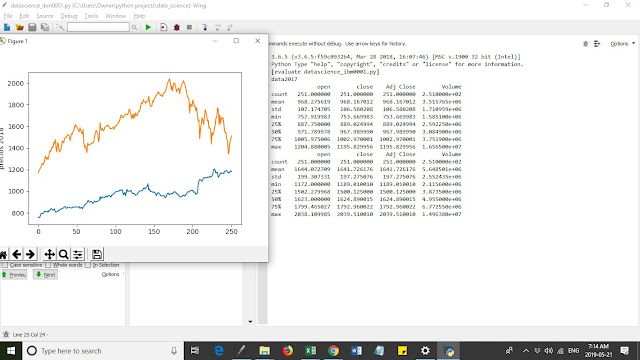

Extract data from stocks yearly and compare to find patterns

The code below summarizes one year of data for a stock.

You could extract the data from Yahoo Finance.

(Go to https://help.yahoo.com/kb/SLN2311.html for more info).

Then put the data in the same folder as your code.

Download the data for two years (e.g. 2018, 2019) and erase the header row (e.g. Date, Open, Close) to make the data easier to process.

#datascience_0001

import pandas as pd #import libraries
import matplotlib.pyplot as plt

path = "amazon2017.csv" #define the location of your document in the computer
df = pd.read_csv(path, header=None)
headers = ['date','open','High','low','close','Adj Close','Volume']

# the header row was erased from the file to avoid problems, so the code above reads it without headers and the line below adds the names you decide
df.columns = headers
data2017 = df[['date','open','close','Adj Close','Volume']].describe()

# The code above gives you a summary of the columns
print('data2017')
print(data2017) #print the summary of data defined in data2017

primero = df[['open']]
plt.plot(primero)
plt.ylabel('prices 2017')
plt.show()

#the code below is for the other year
path = "amazon2018.csv" # The code is the same as above,
#but changes only the path to the document
df = pd.read_csv(path, header=None)
headers = ['date','open','High','low','close','Adj Close','Volume']
df.columns = headers
data2018 = df[['date','open','close','Adj Close','Volume']].describe()

#in the code above, replace 'date' or 'open' with 'low' or any other name in headers
print(data2018)

a = df[['open']]
plt.plot(a)
plt.ylabel('prices 2018')
plt.show()

#The output of the code is shown below. 'open' and 'close' prices were chosen, and also 'Volume'.

#You could choose any column you want by modifying data2018

Possible errors

>builtins.FileNotFoundError: File b'amazon2017.csv' does not exist

solution:

Download the data and save it in the same folder as your Python program, or give the full path to wherever you saved it (for example, the desktop).
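One way to catch this error earlier is to check for the file before handing it to pandas. A minimal sketch using only the standard library; the helper name find_data_file is hypothetical.

```python
from pathlib import Path

def find_data_file(filename, folder="."):
    """Return the path to filename inside folder, or raise a clearer error."""
    candidate = Path(folder) / filename
    if not candidate.is_file():
        raise FileNotFoundError(
            f"{filename} was not found in {Path(folder).resolve()}; "
            "download it and save it there, or pass its full location"
        )
    return candidate

try:
    path = find_data_file("amazon2017.csv")
    print("Found", path)
except FileNotFoundError as err:
    print(err)
```

The returned path can then be passed to pd.read_csv as before.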

Thursday, May 16, 2019

extract data from stocks and graph it

# import libraries

import requests

import time

import http

from bs4 import BeautifulSoup

from datetime import date

import matplotlib.pyplot as plt

import json

jours = []

prix = []

def duplicate_quote(x: str):
    """Replace single quotes with double quotes so the text can be parsed as JSON.

    >>> duplicate_quote("{'Thu Jan 24 04:28:23 2019': 52.64}")
    '{"Thu Jan 24 04:28:23 2019": 52.64}'
    """
    return x.replace("'", '"')

#the two lines of code below create the file first if it does not exist
f1 = open('us_cad.txt', 'a')
f1.close()

f1 = open('us_cad.txt', 'r')
texto = f1.read()
f1.close()

#texto
#"{'Thu Jan 24 04:28:23 2019': 52.64}"

#the code below reverses the text to find the last '{' and slices from there to get the last dictionary; we subtract 1 so that the slice includes the '{' itself
if texto != '':
    new_dic = texto[-(texto[::-1].find('{')) - 1:]
    dic = json.loads(duplicate_quote(new_dic))
else:
    dic = {}

precio = []

#time.ctime()

#'Thu Jan 24 03:51:29 2019'

today = date.today()

today

days = []

quote_page = 'http://30rates.com/usd-cad-forecast-canadian-dollar-to-us-dollar-forecast-tomorrow-week-month'

# query the website and return the html to the variable ‘page’

page = requests.get(quote_page)

soup = BeautifulSoup(page.content, 'html.parser')

# Find the first <strong> tag and get its text

name_box = soup.find('strong')

name = name_box.text

print(name)

precio = []

precio.append(float(name))

days = []

days.append(today.strftime("%d/%m/%y"))

dias = []

dias.append(time.ctime())

#plt.plot(dias, precio)

#plt.plot([1,2,3], [3,4,5])

for i in range(len(dias)):
    dic[dias[i]] = precio[i]

# the with block closes the file on its own
with open('us_cad.txt', 'w') as f:
    f.write(str(dic))

for i in dic:
    jours.append(i)
    prix.append(dic[i])

plt.plot(jours, prix)
plt.show()
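The quote-replacement step is needed only because str(dic) writes Python-style single quotes. One alternative, sketched here, is to save and load the dictionary with the json module directly. The file name rates_example.json and the helper names load_rates and save_rate are made up for this example.

```python
import json
import os
import tempfile

def load_rates(path):
    """Return the saved {timestamp: price} dictionary, or an empty one if no file yet."""
    if not os.path.exists(path):
        return {}
    with open(path, "r") as f:
        return json.load(f)

def save_rate(path, timestamp, price):
    """Add one reading to the saved dictionary and write it back as JSON."""
    rates = load_rates(path)
    rates[timestamp] = price
    with open(path, "w") as f:
        json.dump(rates, f)
    return rates

# Demonstration in a temporary folder so no real file is touched
example_path = os.path.join(tempfile.mkdtemp(), "rates_example.json")
save_rate(example_path, "Thu Jan 24 04:28:23 2019", 52.64)
rates = save_rate(example_path, "Fri Jan 25 04:28:23 2019", 52.70)
print(rates)
```

Because json.dump writes valid JSON, the file can be read back without searching for the last '{' or replacing quotes.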


Wednesday, May 15, 2019

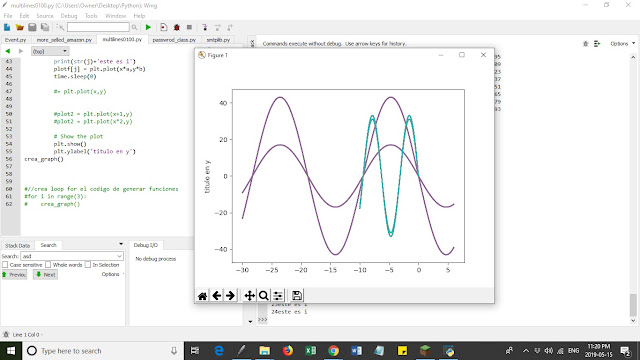

Generating random drawings with random module

To generate random graphs, we first need to think about the range of the random functions.

(If you are new to the random module, read this paragraph; otherwise skip to the next one.)

New to random? Read here. Open the Python interpreter and type

>>> import random

The random module ships with Python, so it needs no installation. For the remaining packages (matplotlib and numpy), go to the command prompt in Windows and type python -m pip install followed by the package name.

#import necessary packages
import matplotlib.pyplot as plt
from matplotlib import pyplot
import numpy as np
import random
import time

#this line determines how many lines you need
NUMERODELINEAS = 4

def crea_graph():
    # Create the vectors X and Y
    plotf = []
    for i in range(NUMERODELINEAS):
        plotf.append('plot%d,' % i)
        print('this is i0' + str(i))
    for i in range(10):
        time.sleep(0)
        print('this is i1 ' + str(i))
        a = random.randint(-50, 50)
        b = random.randint(-55, 55)
        print('this is a ' + str(a) + ' and b ' + str(b))
        x = np.arange((a+1), 10, 0.01)
        print('this is x ' + str(x))
        y = b + np.sin(x)
        #for i in range(4):
        #    print("plot{}".format(i))
        #for i in range(4):
        #    print('plot%d,' %i)
        # generate a random factor to make the sine curve or wave wider (c = random.randrange)
        c = random.randrange(-5, 7)
        print('this is c random' + str(c))
        for j in range(NUMERODELINEAS):
            print(str(j) + ' this is j')
            plotf[j] = plt.plot(a+x*c, c*y)
            print('this is j' + str(j))
            time.sleep(0)
    #= plt.plot(x,y)
    #plot2 = plt.plot(x+1,y)
    #plot2 = plt.plot(x*2,y)
    # Label the y axis, then show the plot
    plt.ylabel('y-axis title')
    plt.show()

crea_graph()

d = random.randint(0, 7)

#loop that repeats the function-generating code
for i in range(d):
    crea_graph()
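For experimenting with the random ranges, it can help to separate generating the curves from drawing them and to fix the seed so that every run gives the same result. The sketch below is a variation, not the code above. It assumes np.linspace in place of np.arange so that x is never empty (np.arange((a+1), 10, 0.01) returns nothing once a is 9 or more), and it skips c = 0, which would flatten the wave. The function name random_sine_curve is hypothetical.

```python
import random
import numpy as np

def random_sine_curve(rng, n_points=500):
    """Return x and y arrays for a sine wave with a random shift and stretch."""
    a = rng.randint(-50, 50)     # horizontal start, like a above
    b = rng.randint(-55, 55)     # vertical offset, like b above
    c = rng.choice([-5, -4, -3, -2, -1, 1, 2, 3, 4, 5, 6])  # stretch, never 0
    x = np.linspace(a, a + 10, n_points)  # always n_points values
    y = c * (b + np.sin(x))
    return x, y

rng = random.Random(42)          # fixed seed: the same curves on every run
curves = [random_sine_curve(rng) for _ in range(4)]
for x, y in curves:
    print(x[0], y.min(), y.max())
```

Each pair can then be drawn with plt.plot(x, y) before a single plt.show().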

